
05. From MEG sensor-space data to HNN simulation

This example demonstrates how to calculate an inverse solution of the median nerve evoked response potential (ERP) in primary somatosensory cortex (S1) from the MNE somatosensory dataset, and then how to simulate a biophysical model network that reproduces the observed dynamics. Note that we do not explain how we arrived at the sequence of evoked drives used in this example; we only demonstrate its implementation. For more background on the HNN model and on the process used to articulate the proximal and distal drives needed to simulate evoked responses, see the HNN ERP tutorial. The sequence of evoked drives presented here is not part of a current publication but is motivated by prior studies [1], [2].

# Authors: Mainak Jas <mainakjas@gmail.com>
#          Ryan Thorpe <ryan_thorpe@brown.edu>

# sphinx_gallery_thumbnail_number = 2

First, we import the packages needed to compute the inverse solution from the MNE somatosensory dataset. MNE can be installed with pip install mne, and the somatosensory dataset is available through the somato module of mne.datasets (its data_path() function downloads the data on first use).

import os.path as op
import matplotlib.pyplot as plt
import mne
from mne.datasets import somato
from mne.minimum_norm import apply_inverse, make_inverse_operator

Now we set the paths to the somato dataset files for subject '01'.

data_path = somato.data_path()
subject = '01'
task = 'somato'
raw_fname = op.join(data_path, 'sub-{}'.format(subject), 'meg',
                    'sub-{}_task-{}_meg.fif'.format(subject, task))
fwd_fname = op.join(data_path, 'derivatives', 'sub-{}'.format(subject),
                    'sub-{}_task-{}-fwd.fif'.format(subject, task))
subjects_dir = op.join(data_path, 'derivatives', 'freesurfer', 'subjects')

Out:

Using default location ~/mne_data for somato...

Creating ~/mne_data

Downloading archive MNE-somato-data.tar.gz to /home/circleci/mne_data

Downloading https://files.osf.io/v1/resources/rxvq7/providers/osfstorage/59c0e2849ad5a1025d4b7346?version=7&action=download&direct (582.2 MB)


Verifying hash 32fd2f6c8c7eb0784a1de6435273c48b.

Decompressing the archive: /home/circleci/mne_data/MNE-somato-data.tar.gz

(please be patient, this can take some time)

Successfully extracted to: ['/home/circleci/mne_data/MNE-somato-data']

Attempting to create new mne-python configuration file:

/home/circleci/.mne/mne-python.json
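The same three paths can also be built with pathlib, which some readers may find easier to scan than nested op.join and format calls. This is a minimal sketch: build_somato_paths is a hypothetical helper that only mirrors the layout used by the op.join calls above, and '/tmp/MNE-somato-data' is a placeholder for whatever somato.data_path() returns on your machine.

```python
from pathlib import Path


def build_somato_paths(data_path, subject='01', task='somato'):
    """Hypothetical helper: rebuild the raw, forward and FreeSurfer paths.

    The directory layout is copied from the op.join calls in the example,
    not taken from an independent description of the dataset.
    """
    root = Path(data_path)
    sub = f'sub-{subject}'
    return {
        'raw': root / sub / 'meg' / f'{sub}_task-{task}_meg.fif',
        'fwd': root / 'derivatives' / sub / f'{sub}_task-{task}-fwd.fif',
        'subjects_dir': root / 'derivatives' / 'freesurfer' / 'subjects',
    }


# Placeholder root; in the example this would be somato.data_path()
paths = build_somato_paths('/tmp/MNE-somato-data')
for key, value in paths.items():
    print(key, value)
```

If your MNE version expects string file names rather than path objects, passing str(paths['raw']) and so on is a safe fallback.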

Then, we load the raw data and estimate the inverse operator.

# Read and band-pass filter the raw data
raw = mne.io.read_raw_fif(raw_fname, preload=True)
l_freq, h_freq = 1, 40
raw.filter(l_freq, h_freq)

# Identify stimulus events associated with MEG time series in the dataset
events = mne.find_events(raw, stim_channel='STI 014')

# Define epochs within the time series
event_id, tmin, tmax = 1, -.2, .17
baseline = None
epochs = mne.Epochs(raw, events, event_id, tmin, tmax, baseline=baseline,
                    reject=dict(grad=4000e-13, eog=350e-6), preload=True)

# Compute the inverse operator
fwd = mne.read_forward_solution(fwd_fname)
cov = mne.compute_covariance(epochs)
inv = make_inverse_operator(epochs.info, fwd, cov)

Out:

Opening raw data file /home/circleci/mne_data/MNE-somato-data/sub-01/meg/sub-01_task-somato_meg.fif...

Range : 237600 ... 506999 = 791.189 ... 1688.266 secs

Ready.

Reading 0 ... 269399 = 0.000 ... 897.077 secs...

Filtering raw data in 1 contiguous segment

Setting up band-pass filter from 1 - 40 Hz

FIR filter parameters

---------------------

Designing a one-pass, zero-phase, non-causal bandpass filter:

- Windowed time-domain design (firwin) method

- Hamming window with 0.0194 passband ripple and 53 dB stopband attenuation

- Lower passband edge: 1.00

- Lower transition bandwidth: 1.00 Hz (-6 dB cutoff frequency: 0.50 Hz)

- Upper passband edge: 40.00 Hz

- Upper transition bandwidth: 10.00 Hz (-6 dB cutoff frequency: 45.00 Hz)

- Filter length: 993 samples (3.307 sec)

111 events found

Event IDs: [1]

Not setting metadata

Not setting metadata

111 matching events found

No baseline correction applied

0 projection items activated

Loading data for 111 events and 112 original time points ...

0 bad epochs dropped

Reading forward solution from /home/circleci/mne_data/MNE-somato-data/derivatives/sub-01/sub-01_task-somato-fwd.fif...

Reading a source space...

[done]

Reading a source space...

[done]

2 source spaces read

Desired named matrix (kind = 3523) not available

Read MEG forward solution (8155 sources, 306 channels, free orientations)

Source spaces transformed to the forward solution coordinate frame

Computing rank from data with rank=None

Using tolerance 1.5e-08 (2.2e-16 eps * 306 dim * 2.2e+05 max singular value)

Estimated rank (mag + grad): 306

MEG: rank 306 computed from 306 data channels with 0 projectors

/home/circleci/project/examples/plot_simulate_somato.py:65: RuntimeWarning: Something went wrong in the data-driven estimation of the data rank as it exceeds the theoretical rank from the info (306 > 64). Consider setting rank to "auto" or setting it explicitly as an integer.

cov = mne.compute_covariance(epochs)

Reducing data rank from 306 -> 306

Estimating covariance using EMPIRICAL

Done.

Number of samples used : 12432

[done]

Converting forward solution to surface orientation

No patch info available. The standard source space normals will be employed in the rotation to the local surface coordinates....

Converting to surface-based source orientations...

[done]

Computing inverse operator with 306 channels.

306 out of 306 channels remain after picking

Selected 306 channels

Creating the depth weighting matrix...

204 planar channels

limit = 7615/8155 = 10.004172

scale = 5.17919e-08 exp = 0.8

Applying loose dipole orientations to surface source spaces: 0.2

Whitening the forward solution.

Computing rank from covariance with rank=None

Using tolerance 2e-12 (2.2e-16 eps * 306 dim * 29 max singular value)

Estimated rank (mag + grad): 64

MEG: rank 64 computed from 306 data channels with 0 projectors

Setting small MEG eigenvalues to zero (without PCA)

Creating the source covariance matrix

Adjusting source covariance matrix.

Computing SVD of whitened and weighted lead field matrix.

largest singular value = 2.42284

scaling factor to adjust the trace = 3.86104e+18 (nchan = 306 nzero = 242)
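Several numbers in the log above can be reproduced by hand, which is a useful sanity check on the filtering and epoching parameters. The sketch below assumes MNE's documented 'auto' transition-bandwidth rules for a band-pass filter, and assumes that epoch boundaries are rounded to the nearest sample with both ends included. The sampling rate of about 300.3 Hz is an approximation inferred from the log (269399 samples spanning 897.077 s), not a value read from the file.

```python
def auto_trans_bandwidths(l_freq, h_freq, sfreq):
    """'auto' transition bandwidths (Hz) as described in MNE's filter docs."""
    l_trans = min(max(l_freq * 0.25, 2.0), l_freq)
    h_trans = min(max(h_freq * 0.25, 2.0), sfreq / 2.0 - h_freq)
    return l_trans, h_trans


def n_epoch_samples(tmin, tmax, sfreq):
    """Samples per epoch, assuming both edges are rounded and included."""
    return int(round(tmax * sfreq)) - int(round(tmin * sfreq)) + 1


sfreq = 300.3075  # approximate: 269399 samples over 897.077 s in the log
l_trans, h_trans = auto_trans_bandwidths(1., 40., sfreq)
print(l_trans, h_trans)                      # transition bandwidths in Hz
print(1. - l_trans / 2, 40. + h_trans / 2)   # -6 dB cutoff frequencies in Hz
n_times = n_epoch_samples(-.2, .17, sfreq)
print(n_times, 111 * n_times)  # per-epoch samples, samples for the covariance
```

With these assumptions the sketch recovers the 1 Hz and 10 Hz transition bandwidths, the 0.5 Hz and 45 Hz cutoffs, the 112 time points per epoch, and the 111 x 112 = 12432 samples used to estimate the covariance.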

There are several methods for source reconstruction. Some, such as MNE, are distributed source methods, whereas dipole fitting estimates the location and amplitude of a single current dipole. At the moment, we do not offer explicit recommendations on which source reconstruction technique is best for HNN. However, we do want our users to note that the dipole currents simulated with HNN are assumed to be normal to the cortical surface. Hence, using the option pick_ori='normal' is appropriate.

snr = 3.
lambda2 = 1. / snr ** 2
evoked = epochs.average()
stc = apply_inverse(evoked, inv, lambda2, method='MNE',
                    pick_ori="normal", return_residual=False,
                    verbose=True)

Out:

Preparing the inverse operator for use...

Scaled noise and source covariance from nave = 1 to nave = 111

Created the regularized inverter

The projection vectors do not apply to these channels.

Created the whitener using a noise covariance matrix with rank 64 (242 small eigenvalues omitted)

Applying inverse operator to "1"...

Picked 306 channels from the data

Computing inverse...

Eigenleads need to be weighted ...

Computing residual...

Explained 86.1% variance

[done]
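To make the role of lambda2 = 1 / snr ** 2 more concrete, here is a toy minimum-norm estimate on synthetic data. It is a sketch under strong simplifying assumptions: the data are treated as already whitened, the source covariance is the identity (no depth weighting, no loose-orientation prior), and the random gain matrix is rescaled so that trace(G G^T) equals the number of channels, loosely in the spirit of the trace adjustment reported in the log. It is not MNE's full implementation, and its "explained variance" is only an analogue of the figure MNE prints.

```python
import numpy as np


def minimum_norm(gain, data, lambda2):
    """Toy minimum-norm estimate: J = G^T (G G^T + lambda2 * I)^-1 y.

    Assumes whitened data and an identity source covariance.
    """
    n_chan = gain.shape[0]
    kernel = gain.T @ np.linalg.inv(gain @ gain.T + lambda2 * np.eye(n_chan))
    return kernel @ data


def explained_variance(gain, data, estimate):
    """Fraction of sensor-space power reproduced by the source estimate."""
    residual = data - gain @ estimate
    return 1. - np.sum(residual ** 2) / np.sum(data ** 2)


rng = np.random.default_rng(0)
n_chan, n_src, n_times = 20, 100, 50
gain = rng.standard_normal((n_chan, n_src))
# Rescale so the mean eigenvalue of G G^T is 1, putting lambda2 on that scale
gain /= np.sqrt(np.trace(gain @ gain.T) / n_chan)

sources = np.zeros((n_src, n_times))
sources[42] = np.sin(np.linspace(0., np.pi, n_times))  # one active source
data = gain @ sources

snr = 3.
lambda2 = 1. / snr ** 2  # 1/9, as in the example
estimate = minimum_norm(gain, data, lambda2)
print(estimate.shape, explained_variance(gain, data, estimate))
```

Because the residual shrinks monotonically as lambda2 decreases, a smaller lambda2 (a higher assumed SNR) always explains more of the sensor data here, at the price of a less regularized, noisier source estimate on real recordings.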

To extract the primary response in S1, we create a label for the postcentral gyrus in source space.

hemi = 'rh'
label_tag = 'G_postcentral'
label_s1 = mne.read_labels_from_annot(subject, parc='aparc.a2009s', hemi=hemi,
                                      regexp=label_tag,
                                      subjects_dir=subjects_dir)[0]

Out:

Reading labels from parcellation...

read 1 labels from /home/circleci/mne_data/MNE-somato-data/derivatives/freesurfer/subjects/01/label/rh.aparc.a2009s.annot
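Because the example keeps only the first element ([0]) of the returned list, the regexp should match exactly one label. The sketch below illustrates that filtering step with plain Python, assuming search-style matching (the pattern may occur anywhere in the label name). select_labels is a hypothetical stand-in for the filtering inside mne.read_labels_from_annot, and the label names are illustrative Destrieux (aparc.a2009s) names, not a listing read from this subject's annotation file.

```python
import re


def select_labels(label_names, regexp):
    """Hypothetical stand-in: keep label names that match regexp anywhere."""
    pattern = re.compile(regexp)
    return [name for name in label_names if pattern.search(name)]


# Illustrative names; real ones come from the subject's annotation file
names = ['G_postcentral-rh', 'S_postcentral-rh', 'G_precentral-rh',
         'S_central-rh']
print(select_labels(names, 'G_postcentral'))
print(select_labels(names, 'postcentral'))
```

The looser pattern 'postcentral' would also catch the postcentral sulcus, and indexing with [0] would then silently depend on the order of the labels, which is why the more specific 'G_postcentral' tag is preferable.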

Visualizing the distributed S1 activation in reference to the geometric structure of the cortex (i.e., plotted on a structural MRI) can help us figure out how to orient the dipole. Note that in the HNN framework, positive and negative deflections of a current dipole source correspond to upward (from deep to superficial) and downward (from superficial to deep) current flow, respectively. Remove the triple quotes around the following code to open an interactive 3D rendering of the brain and its surface activation (this requires the PyVista Python library). You should get two plots: the first shows the postcentral gyrus label from which the dipole time course was extracted, and the second shows the MNE activation at 0.040 s. They should resemble the following images.

'''
Brain = mne.viz.get_brain_class()
brain_label = Brain(subject, hemi, 'white', subjects_dir=subjects_dir)
brain_label.add_label(label_s1, color='green', alpha=0.9)
stc_label = stc.in_label(label_s1)
brain = stc_label.plot(subjects_dir=subjects_dir, hemi=hemi, surface='white',
                       view_layout='horizontal', initial_time=0.04,
                       backend='pyvista')
'''


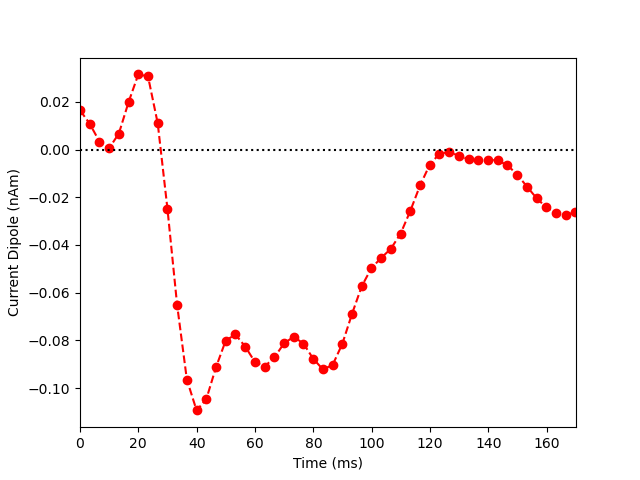

Now we extract the representative time course of dipole activation in our labeled brain region using mode='pca_flip' (see this MNE-Python example for more details). Note that the most prominent component of the median nerve response occurs in the posterior wall of the central sulcus at ~0.040 s. Since the dipolar activity here is negative, we orient the extracted waveform so that the deflection at ~0.040 s points downward. Thus, the ~0.040 s deflection corresponds to current flow traveling from superficial to deep layers of cortex. The factor of 1e9 converts the time course from A·m to nAm.

flip_data = stc.extract_label_time_course(label_s1, inv['src'],
                                          mode='pca_flip')
dipole_tc = -flip_data[0] * 1e9

plt.figure()
plt.plot(1e3 * stc.times, dipole_tc, 'ro--')
plt.xlabel('Time (ms)')
plt.ylabel('Current Dipole (nAm)')
plt.xlim((0, 170))
plt.axhline(0, c='k', ls=':')
plt.show()

Out:

Extracting time courses for 1 labels (mode: pca_flip)
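The pca_flip mode collapses the many vertex time courses in the label into a single waveform. The sketch below is our simplified reading of that idea, not MNE's code: take the first SVD component, choose its sign by agreement with a per-vertex sign-flip vector (which MNE derives from the source orientations, but which is simply given here), and rescale it to the average power of the vertices. The five-vertex label and the 20 nAm waveform peaking at 40 ms are synthetic assumptions.

```python
import numpy as np


def pca_flip(label_data, flip):
    """Simplified 'pca_flip' summary of vertex time courses in one label.

    label_data : array, shape (n_vertices, n_times)
    flip : array of +1/-1 per vertex (assumed given here)
    """
    u, s, vt = np.linalg.svd(label_data, full_matrices=False)
    # The SVD sign is arbitrary: align the first component with the flips
    sign = np.sign(u[:, 0] @ flip)
    # Rescale so the summary carries the average power of the vertices
    scale = np.linalg.norm(s) / np.sqrt(label_data.shape[0])
    return sign * scale * vt[0]


times = np.linspace(0., 0.17, 112)  # seconds
# Synthetic 20 nAm (2e-8 A*m) deflection peaking at 40 ms
waveform = 2e-8 * np.exp(-((times - 0.04) / 0.005) ** 2)
flip = np.array([1., 1., -1., 1., -1.])
# Five vertices see the same source, with opposite signs where normals flip
label_data = flip[:, None] * waveform[None, :]

tc = pca_flip(label_data, flip)
dipole_tc = -tc * 1e9  # invert as in the example, and convert A*m to nAm
peak_ms = 1e3 * times[np.argmin(dipole_tc)]
print(peak_ms, dipole_tc.min())
```

Without the sign flips, the vertices with opposite normals would cancel in a plain average; the flip vector is what lets the summary keep the full amplitude. The final negation mirrors the example's choice to make the ~40 ms deflection point downward.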

Now let us simulate the same response with hnn-core. We read in the network parameters from N20.json and instantiate the network.

import hnn_core
from hnn_core import simulate_dipole, read_params, Network, JoblibBackend
from hnn_core import average_dipoles

hnn_core_root = op.dirname(hnn_core.__file__)
params_fname = op.join(hnn_core_root, 'param', 'N20.json')
params = read_params(params_fname)
net = Network(params)

To simulate the source of the median nerve evoked response, we add a sequence of synchronous evoked drives: 1 proximal, 2 distal, and 1 final proximal drive. To understand the physiological implications of proximal and distal drive, as well as the general process used to articulate a sequence of exogenous drives for simulating evoked responses, see the HNN ERP tutorial. Note that setting sync_within_trial=True creates drives with synchronous input (arriving at and transmitted by hypothetical granule cells at the center of the network) to all pyramidal and basket cells that receive distal drive. Granule cells themselves are not explicitly modeled within HNN.

# Early proximal drive
weights_ampa_p = {'L2_basket': 0.0036, 'L2_pyramidal': 0.0039,
                  'L5_basket': 0.0019, 'L5_pyramidal': 0.0020}
weights_nmda_p = {'L2_basket': 0.0029, 'L2_pyramidal': 0.0005,
                  'L5_basket': 0.0030, 'L5_pyramidal': 0.0019}
synaptic_delays_p = {'L2_basket': 0.1, 'L2_pyramidal': 0.1,
                     'L5_basket': 1.0, 'L5_pyramidal': 1.0}
net.add_evoked_drive(
    'evprox1', mu=21., sigma=4., numspikes=1, sync_within_trial=True,
    weights_ampa=weights_ampa_p, weights_nmda=weights_nmda_p,
    location='proximal', synaptic_delays=synaptic_delays_p, seedcore=6)

# Late proximal drive
weights_ampa_p = {'L2_basket': 0.003, 'L2_pyramidal': 0.0039,
                  'L5_basket': 0.004, 'L5_pyramidal': 0.0020}
weights_nmda_p = {'L2_basket': 0.001, 'L2_pyramidal': 0.0005,
                  'L5_basket': 0.002, 'L5_pyramidal': 0.0020}
synaptic_delays_p = {'L2_basket': 0.1, 'L2_pyramidal': 0.1,
                     'L5_basket': 1.0, 'L5_pyramidal': 1.0}
net.add_evoked_drive(
    'evprox2', mu=134., sigma=4.5, numspikes=1, sync_within_trial=True,
    weights_ampa=weights_ampa_p, weights_nmda=weights_nmda_p,
    location='proximal', synaptic_delays=synaptic_delays_p, seedcore=6)

# Early distal drive
weights_ampa_d = {'L2_basket': 0.0043, 'L2_pyramidal': 0.0032,
                  'L5_pyramidal': 0.0009}
weights_nmda_d = {'L2_basket': 0.0029, 'L2_pyramidal': 0.0051,
                  'L5_pyramidal': 0.0010}
synaptic_delays_d = {'L2_basket': 0.1, 'L2_pyramidal': 0.1,
                     'L5_pyramidal': 0.1}
net.add_evoked_drive(
    'evdist1', mu=32., sigma=2.5, numspikes=1, sync_within_trial=True,
    weights_ampa=weights_ampa_d, weights_nmda=weights_nmda_d,
    location='distal', synaptic_delays=synaptic_delays_d, seedcore=6)

# Late distal drive
weights_ampa_d = {'L2_basket': 0.0041, 'L2_pyramidal': 0.0019,
                  'L5_pyramidal': 0.0018}
weights_nmda_d = {'L2_basket': 0.0032, 'L2_pyramidal': 0.0018,
                  'L5_pyramidal': 0.0017}
synaptic_delays_d = {'L2_basket': 0.1, 'L2_pyramidal': 0.1,
                     'L5_pyramidal': 0.1}
net.add_evoked_drive(
    'evdist2', mu=84., sigma=4.5, numspikes=1, sync_within_trial=True,
    weights_ampa=weights_ampa_d, weights_nmda=weights_nmda_d,
    location='distal', synaptic_delays=synaptic_delays_d, seedcore=2)
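Although the drives are added in a different order in the code, their mean times (mu, in ms) produce the proximal, distal, distal, proximal sequence described earlier. The toy model below sketches what "synchronous" means for a single drive: one spike time per trial is drawn from a Gaussian with mean mu and standard deviation sigma, that same time is shared by every target cell type, and each type then receives it after its own synaptic delay. This is an illustrative assumption about the timing logic only; synchronous_drive_times is a hypothetical helper, and hnn-core's own implementation (including how seedcore seeds its random draws) may differ in detail.

```python
import numpy as np

# (mu, sigma) in ms for the four evoked drives defined above
drives = {'evprox1': (21., 4.), 'evdist1': (32., 2.5),
          'evdist2': (84., 4.5), 'evprox2': (134., 4.5)}
# Synaptic delays (ms) of the early proximal drive, copied from above
delays_p = {'L2_basket': 0.1, 'L2_pyramidal': 0.1,
            'L5_basket': 1.0, 'L5_pyramidal': 1.0}


def synchronous_drive_times(mu, sigma, delays, n_trials, seed=0):
    """Hypothetical toy model of one synchronous evoked drive.

    One spike time per trial is drawn from N(mu, sigma) and shared by all
    target cell types; each type receives it after its own delay.
    """
    rng = np.random.default_rng(seed)
    spike_times = rng.normal(mu, sigma, size=n_trials)
    return {cell: spike_times + delay for cell, delay in delays.items()}


order = sorted(drives, key=lambda name: drives[name][0])
print(order)
arrivals = synchronous_drive_times(*drives['evprox1'], delays_p, n_trials=2)
for cell, t in arrivals.items():
    print(cell, np.round(t, 2))
```

In this picture the trial-to-trial jitter comes only from sigma, while the fixed 0.9 ms gap between the L2 and L5 arrivals of the proximal drive comes from the synaptic delays.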

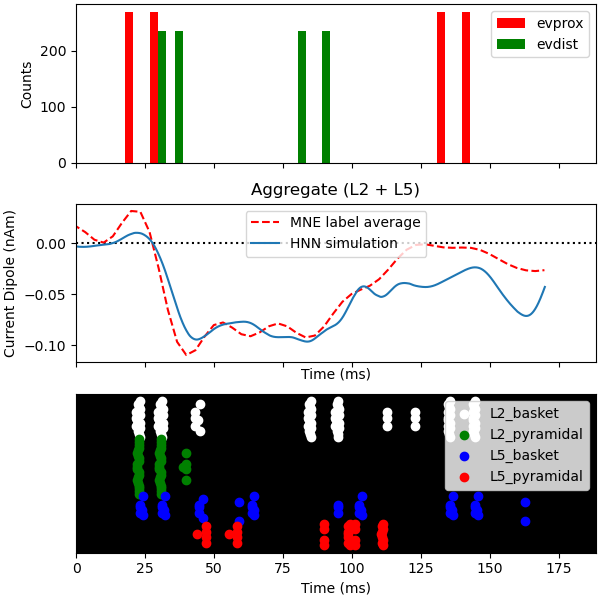

Now we run the simulation over 2 trials so that we can plot the average aggregate dipole. For a better match to the empirical waveform, set n_trials to 25 or more.

with JoblibBackend(n_jobs=2):
    dpls = simulate_dipole(net, n_trials=2)

Out:

joblib will run over 2 jobs

Because the model is a reduced representation of the larger network that contributes to the response, its output is noisier than the net activity of a larger network, in which such fluctuations average out, and its dipole amplitude is smaller than that of the recorded data. The post-processing steps of smoothing and scaling the simulated dipole response allow us to approximate more accurately the true signal responsible for the recorded macroscopic evoked response [1], [2].

dpl_smooth_win = 20
dpl_scalefctr = 12
for dpl in dpls:
    dpl.smooth(dpl_smooth_win)
    dpl.scale(dpl_scalefctr)
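hnn-core's Dipole.smooth and Dipole.scale are the authoritative implementations of these two steps. The sketch below only illustrates the idea, assuming that smoothing is a convolution with a unit-sum Hamming window whose length is given in milliseconds and that scaling is a plain multiplication. The 0.025 ms time step and the synthetic two-trial signal are assumptions for illustration, and hamming_smooth is a hypothetical helper.

```python
import numpy as np


def hamming_smooth(signal, window_len_ms, dt_ms):
    """Convolve with a unit-sum Hamming window spanning window_len_ms."""
    n = max(int(round(window_len_ms / dt_ms)), 1)
    window = np.hamming(n)
    window /= window.sum()  # unit sum keeps a constant signal unchanged
    return np.convolve(signal, window, mode='same')


dt = 0.025  # ms; assumed integration time step
times = np.arange(0., 170., dt)
rng = np.random.default_rng(1)
# Two synthetic noisy "trials" standing in for simulated dipoles
trials = [np.sin(2 * np.pi * times / 170.) + 0.3 * rng.standard_normal(times.size)
          for _ in range(2)]

# Average, then smooth with a 20 ms window and scale by 12
avg = np.mean(trials, axis=0)
processed = 12 * hamming_smooth(avg, window_len_ms=20, dt_ms=dt)
print(processed.shape)
```

Both steps are linear, so smoothing and scaling each trial before averaging, as the example does, gives the same result as averaging first. Note that a larger smoothing window suppresses more of the model's trial noise but also blurs the sharp ~40 ms deflection.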

Finally, we plot the driving spike histogram, the empirical and simulated median nerve evoked response waveforms, and the output spike raster.

fig, axes = plt.subplots(3, 1, sharex=True, figsize=(6, 6),
                         constrained_layout=True)
net.cell_response.plot_spikes_hist(ax=axes[0],
                                   spike_types=['evprox', 'evdist'],
                                   show=False)
axes[1].axhline(0, c='k', ls=':', label='_nolegend_')
axes[1].plot(1e3 * stc.times, dipole_tc, 'r--')
average_dipoles(dpls).plot(ax=axes[1], show=False)
axes[1].legend(['MNE label average', 'HNN simulation'])
axes[1].set_ylabel('Current Dipole (nAm)')
net.cell_response.plot_spikes_raster(ax=axes[2])

Out:

<Figure size 600x600 with 3 Axes>

References

[1] Jones, S. R., Pritchett, D. L., Stufflebeam, S. M., Hämäläinen, M. & Moore, C. I. Neural correlates of tactile detection: a combined magnetoencephalography and biophysically based computational modeling study. J. Neurosci. 27, 10751–10764 (2007).

[2] Neymotin, S. A., Daniels, D. S., Caldwell, B., McDougal, R. A., Carnevale, N. T., Jas, M., Moore, C. I., Hines, M. L., Hämäläinen, M. & Jones, S. R. Human Neocortical Neurosolver (HNN), a new software tool for interpreting the cellular and network origin of human MEG/EEG data. eLife 9, e51214 (2020). https://doi.org/10.7554/eLife.51214

Total running time of the script: ( 1 minutes 19.805 seconds)